The Latest Motion Control & Gesture Recognition Technologies

Introduction: The Touchless Revolution Has Arrived

In a world increasingly shaped by automation and human–machine interaction, motion control and gesture recognition are no longer futuristic concepts — they’re integral to everyday life. From gaming and AR/VR headsets to autonomous vehicles and healthcare robotics, motion control technology bridges the physical and digital worlds.

In 2025, gesture-based systems are evolving faster than ever, fueled by breakthroughs in AI, computer vision, edge processing, and sensor fusion. The global gesture recognition and motion control market has already crossed $30 billion in value, and it’s projected to double within the next few years.

In this post, we’ll explore the latest trends, technologies, and applications defining motion control and gesture recognition today, and what the next wave of innovation means for consumers, developers, and industries alike.

1. What Is Motion Control & Gesture Recognition?

Motion Control

Motion control is the technology that allows machines to track physical movement and respond with precisely controlled movement of their own, such as driving a motor, axis, or robot arm to a target position or speed. It’s the foundation behind robotics, industrial automation, and precision systems. A typical motion control system consists of:

- Sensors (to detect motion or position)

- Controllers (to process inputs)

- Actuators (to produce movement)

- Feedback loops (for accuracy)
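The four components above can be seen working together in a minimal Python sketch. A PID controller, one common feedback law chosen here purely as an illustration, reads a simulated position sensor and commands a simulated actuator. The plant model (an axis whose velocity equals the controller's command) and the gains are assumptions, not values for any real hardware.

```python
from typing import Optional


class PID:
    """Textbook PID controller: output = Kp*e + Ki*integral(e) + Kd*de/dt."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self.integral += error * dt
        # Skip the derivative on the very first sample (no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(setpoint: float = 1.0, steps: int = 2000, dt: float = 0.01) -> float:
    """Close the loop around a hypothetical axis whose velocity equals the command."""
    pid = PID(kp=2.0, ki=0.5, kd=0.05)  # illustrative gains, not tuned for real hardware
    position = 0.0                      # what the "sensor" reports
    for _ in range(steps):
        command = pid.update(setpoint, position, dt)  # controller processes the reading
        position += command * dt                      # "actuator" moves the axis
    return position


print(f"final position: {simulate():.3f}")
```

Raising `kp` speeds up the response, but on real hardware with inertia and delay it eventually causes overshoot and oscillation, which is why gains are tuned per system.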

Modern motion control goes beyond motors — it includes AI algorithms, MEMS sensors, IMUs, and camera-based systems that make devices more responsive and adaptive.

Gesture Recognition

Gesture recognition focuses on interpreting human gestures — movements of hands, face, or body — to command or interact with digital systems. It uses sensors like cameras, radar, or depth detectors to analyze and classify gestures in real time.

Examples:

- Swiping your hand to skip a song

- Nodding to control a wearable headset

- Waving to activate a smart TV

Together, gesture and motion control tech represent a new era of human-centered computing — where devices understand human movement naturally, without touchscreens or buttons.

2. The Evolution: From Wii Controllers to AI-Powered Motion Interfaces

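Gesture recognition reached the mass market in the mid-to-late 2000s with gaming systems like the Nintendo Wii (2006) and Microsoft Kinect (2010). The Wii Remote paired an infrared camera with accelerometers, while Kinect combined an RGB camera with an infrared depth sensor to track full-body movement. Since then, technology has advanced dramatically: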

| Generation | Era | Core Technology | Examples |
|---|---|---|---|
| 1st Gen | 2005–2010 | Infrared tracking | Nintendo Wii, early VR |
| 2nd Gen | 2010–2015 | RGB + depth sensors | Microsoft Kinect, Leap Motion |
| 3rd Gen | 2015–2020 | Computer vision + ML | AR/VR hand tracking |
| 4th Gen | 2020–2025 | AI + sensor fusion + radar | Automotive, healthcare, industrial robotics |

The jump from 3D cameras to AI-enhanced sensor fusion has unlocked gesture recognition that’s faster, more accurate, and usable even in low-light or noisy environments.

3. Key Components Driving Motion Control Systems

a) Sensors

Modern systems combine several sensor types:

- Inertial Measurement Units (IMUs): measure acceleration and angular rate.

- LiDAR & ToF (Time-of-Flight) sensors: create 3D maps of the environment.

- Millimeter-wave radar: useful for short-range gesture detection in all lighting.

- Optical cameras: track hand, face, and body landmarks.

- Ultrasound & capacitive sensors: detect proximity and nearby motion, which suits wearables.
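IMU readings are usually fused rather than used raw. A gyroscope is smooth but drifts over time, while an accelerometer's gravity reading is drift-free but noisy. A complementary filter is one of the simplest ways to combine the two. The sketch below estimates a single tilt angle; the blend factor `alpha`, the sample rate, and the synthetic readings are assumptions for illustration.

```python
import math
from typing import Sequence, Tuple


def accel_tilt_deg(ax: float, az: float) -> float:
    """Tilt about one axis from the gravity vector (valid when the device is not accelerating)."""
    return math.degrees(math.atan2(ax, az))


def complementary_filter(gyro_dps: Sequence[float],
                         accels: Sequence[Tuple[float, float]],
                         dt: float, alpha: float = 0.98) -> float:
    """Blend integrated gyro rate (short-term) with accelerometer tilt (long-term).

    gyro_dps: angular rates in degrees/second.
    accels:   (ax, az) pairs in any consistent unit, e.g. g.
    alpha:    weight on the gyro path; 0.98 is an illustrative choice.
    """
    angle = accel_tilt_deg(*accels[0])  # initialise from gravity
    for rate, (ax, az) in zip(gyro_dps, accels):
        gyro_angle = angle + rate * dt        # integrate angular rate
        accel_angle = accel_tilt_deg(ax, az)  # absolute but noisy reference
        angle = alpha * gyro_angle + (1 - alpha) * accel_angle
    return angle


# Synthetic case: device held still at 30 degrees, gyro has a +0.5 deg/s bias.
true_tilt = math.radians(30)
samples = [(math.sin(true_tilt), math.cos(true_tilt))] * 1000
biased_gyro = [0.5] * 1000
estimate = complementary_filter(biased_gyro, samples, dt=0.01)
print(f"estimated tilt: {estimate:.2f} deg")
```

Integrating the biased gyro alone would drift by 5 degrees over these 10 seconds; the accelerometer term pulls the estimate back, leaving only a small constant offset of roughly a quarter of a degree.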

b) Controllers

Microcontrollers (MCUs), DSPs, and AI edge processors handle data streams from sensors. Platforms like NVIDIA Jetson, Google Coral, and Qualcomm Snapdragon XR are popular in AI-driven motion systems.

c) Algorithms & AI

Deep learning models interpret gestures from noisy sensor inputs. Techniques include:

- CNNs for image-based gesture classification

- RNNs and Transformers for sequence recognition

- Sensor fusion algorithms to combine radar + vision + IMU data
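Sequence recognition means comparing how a signal evolves over time, not just a single frame. As a lightweight classical stand-in for the RNN and Transformer approaches listed above (not a neural model), the sketch below uses dynamic time warping (DTW) to match a recorded one-dimensional motion trace against stored gesture templates. The template names and values are hypothetical.

```python
from typing import Dict, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic O(len(a) * len(b)) dynamic time warping with absolute-difference cost."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: match, insertion, deletion.
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    return cost[n][m]


def classify(trace: Sequence[float], templates: Dict[str, Sequence[float]]) -> str:
    """Return the name of the template with the smallest DTW distance."""
    return min(templates, key=lambda name: dtw_distance(trace, templates[name]))


# Hypothetical templates: normalised horizontal hand position over time.
templates = {
    "swipe_right": [0.0, 0.2, 0.5, 0.8, 1.0],
    "swipe_left": [1.0, 0.8, 0.5, 0.2, 0.0],
}
# A slower right swipe with extra samples still matches thanks to time warping.
print(classify([0.0, 0.1, 0.2, 0.4, 0.5, 0.7, 0.8, 0.9, 1.0], templates))
```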

d) Actuators

In motion control (especially robotics), actuators execute commands through motors, servos, or pneumatics — with feedback ensuring precision and repeatability.

4. Cutting-Edge Trends in Motion Control & Gesture Tech

Let’s break down the major 2025 trends transforming the landscape:

1. AI-Powered Gesture Recognition

AI is the heart of modern gesture interfaces. Systems now use deep neural networks to detect gestures with latencies under 50 ms, and AI lets devices learn personalized gestures and adapt to different users and contexts.

Example:

The latest AR glasses use AI hand-tracking to recognize micro-gestures (like a pinch or flick) without requiring gloves or other worn sensors.
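Personalization does not always require retraining a deep network. One simple approach, shown here as an assumption rather than how any particular product works, is to keep a per-user average (centroid) of feature vectors for each gesture and classify new samples by the nearest centroid. The two-dimensional features are hypothetical placeholders for whatever embedding an upstream hand-tracking model produces.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


class PersonalGestureClassifier:
    """Nearest-centroid classifier that learns from a handful of user examples."""

    def __init__(self) -> None:
        self._sums: Dict[str, List[float]] = {}
        self._counts: Dict[str, int] = defaultdict(int)

    def add_example(self, label: str, features: Sequence[float]) -> None:
        """Accumulate one labelled example into that gesture's running sum."""
        if label not in self._sums:
            self._sums[label] = [0.0] * len(features)
        self._sums[label] = [s + f for s, f in zip(self._sums[label], features)]
        self._counts[label] += 1

    def predict(self, features: Sequence[float]) -> str:
        """Return the label whose centroid is closest (Euclidean distance)."""
        def dist(label: str) -> float:
            centroid = [s / self._counts[label] for s in self._sums[label]]
            return math.dist(centroid, features)
        return min(self._sums, key=dist)


clf = PersonalGestureClassifier()
# Hypothetical features (e.g. thumb-index gap, wrist flick speed) for one user.
clf.add_example("pinch", [0.05, 0.1])
clf.add_example("pinch", [0.07, 0.2])
clf.add_example("flick", [0.30, 1.5])
clf.add_example("flick", [0.25, 1.8])
print(clf.predict([0.06, 0.15]))
```

Adding a new gesture costs only a few examples and no retraining, which is the appeal of this design on small devices.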

2. Touchless Interfaces in Public & Healthcare Environments

COVID-19 accelerated demand for contactless controls in hospitals, airports, and cars. Gesture-based systems allow people to interact with screens, elevators, and kiosks without touching shared surfaces.

Example:

- In hospitals, surgeons use gesture-controlled medical displays to review scans mid-surgery without breaking sterility.

- In vehicles, BMW’s iDrive system uses gesture controls for volume, calls, and navigation.

3. 3D Motion Sensing with LiDAR and ToF Cameras

3D sensing gives devices depth perception, enabling accurate motion tracking in space. Time-of-Flight (ToF) and structured light systems are common in smartphones, AR/VR headsets, and robotics.

Advantages:

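- Works in low light or darkness, since the sensor supplies its own infrared illumination (strong sunlight can still degrade it)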

- Captures real-world dimensions

- Enables precise hand-tracking and environmental awareness

Applications:

- AR/VR motion tracking

- Smart home devices

- Industrial safety systems
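The depth measurement behind a direct (pulsed) ToF sensor comes down to one formula: distance equals the speed of light times the round-trip time, divided by two because the light travels out and back. Many ToF cameras instead measure the phase shift of modulated light; the sketch below covers only the direct case.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Direct time-of-flight: light goes out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_distance_s(distance_m: float) -> float:
    """Inverse: how long the echo takes for a target at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A hand 0.5 m from the sensor returns light in roughly 3.3 nanoseconds,
# so resolving millimetres requires picosecond-scale timing.
print(f"{round_trip_for_distance_s(0.5) * 1e9:.2f} ns")
```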

4. Radar-Based Gesture Control

Radar technology, such as Google Soli (which operates at 60 GHz), detects micro-motions with millimeter precision. Unlike cameras, radar captures no images, which makes it more privacy-friendly, and it works through fabrics and in the dark.

Use cases:

- Smartwatches that sense hand gestures even when the screen is off

- Automotive gesture sensors for infotainment control

- Touchless smart speakers
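Radar reaches millimeter-scale sensitivity because it tracks the phase of the reflected wave, not just the echo delay. At 60 GHz the wavelength is about 5 mm, and a reflector that moves by a distance Δd changes the round-trip phase by 4πΔd/λ. The sketch works through that arithmetic for a simplified single-target model; it is not Soli's actual signal-processing pipeline.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_m(freq_hz: float) -> float:
    return SPEED_OF_LIGHT_M_PER_S / freq_hz


def phase_shift_rad(displacement_m: float, freq_hz: float) -> float:
    """Round-trip phase change when a reflector moves displacement_m toward or away from the radar."""
    return 4 * math.pi * displacement_m / wavelength_m(freq_hz)


def displacement_from_phase_m(delta_phase_rad: float, freq_hz: float) -> float:
    """Invert the relation; unambiguous only while |delta_phase| < pi, i.e. |d| < lambda / 4."""
    return delta_phase_rad * wavelength_m(freq_hz) / (4 * math.pi)


f = 60e9  # 60 GHz, the band the text cites for Soli
print(f"wavelength: {wavelength_m(f) * 1e3:.2f} mm")
print(f"phase change for 0.5 mm fingertip motion: {math.degrees(phase_shift_rad(0.5e-3, f)):.0f} deg")
```

A sub-millimeter finger movement therefore produces a phase change of tens of degrees, which is easy to measure.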

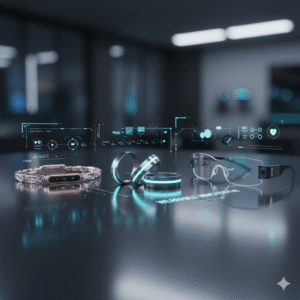

5. Wearable Gesture Control

Smart wearables — gloves, rings, bands — are evolving into gesture input devices. Equipped with IMUs, flex sensors, and electromyography (EMG), they detect muscle signals and motion for intuitive control.

Examples:

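- The Myo armband (since discontinued) interpreted muscle activity to control drones and presentations

- Apple Watch’s “Double Tap” gesture, which Apple says is detected by combining accelerometer, gyroscope, and optical heart sensor data with a machine-learning model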

- VR gloves offering haptic feedback for full-hand immersion
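A common first step with EMG is to turn the raw, oscillating muscle signal into an amplitude envelope, for example a moving root-mean-square (RMS), and flag activation when it crosses a threshold. The window length, threshold, and synthetic signal below are illustrative assumptions; real devices calibrate per user and usually feed richer features to a classifier.

```python
import math
from typing import List, Sequence


def moving_rms(signal: Sequence[float], window: int) -> List[float]:
    """RMS over a sliding window (one value per full window)."""
    out = []
    for start in range(len(signal) - window + 1):
        chunk = signal[start:start + window]
        out.append(math.sqrt(sum(x * x for x in chunk) / window))
    return out


def activation_onset(signal: Sequence[float], window: int, threshold: float) -> int:
    """Index of the first window whose RMS exceeds threshold, or -1 if none."""
    for i, value in enumerate(moving_rms(signal, window)):
        if value > threshold:
            return i
    return -1


# Synthetic EMG: quiet baseline, then a burst of larger oscillation from sample 200.
rest = [0.02 * math.sin(0.9 * n) for n in range(200)]
burst = [0.5 * math.sin(0.9 * n) for n in range(200, 400)]
print(activation_onset(rest + burst, window=20, threshold=0.1))
```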

6. Haptics & Feedback Integration

Gesture recognition is becoming bi-directional — not only can machines read gestures, but they can respond via haptic feedback.

Innovations:

- Ultrasonic mid-air haptics that simulate touch on your palm

- Vibration motors and electrostatic surfaces for realistic texture feedback

- Tactile gloves in VR that recreate touch and resistance
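Mid-air haptic arrays create a pressure point on the palm by firing many ultrasonic transducers with small, individually computed delays so that their wavefronts arrive at the focal point at the same moment. The sketch computes those delays for a hypothetical one-dimensional row of transducers. The 10 mm pitch and the 343 m/s speed of sound (air at about 20 °C) are assumptions; real arrays are two-dimensional and also modulate the focus so the skin can feel it.

```python
import math
from typing import List, Tuple

SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at roughly 20 degrees C


def focus_delays_s(element_x_m: List[float], focus: Tuple[float, float]) -> List[float]:
    """Per-element firing delays so all wavefronts reach `focus` simultaneously.

    Elements sit on the line z = 0; focus is (x, z) in metres above the array.
    The farthest element fires first (delay 0); nearer ones wait.
    """
    fx, fz = focus
    distances = [math.hypot(fx - x, fz) for x in element_x_m]
    farthest = max(distances)
    return [(farthest - d) / SPEED_OF_SOUND_M_PER_S for d in distances]


# Hypothetical row of 5 transducers at 10 mm pitch, focusing 20 cm above the centre.
elements = [i * 0.01 for i in range(-2, 3)]
for x, delay in zip(elements, focus_delays_s(elements, (0.0, 0.20))):
    print(f"x = {x * 1000:+.0f} mm -> delay {delay * 1e6:.2f} us")
```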

7. Edge Computing for Low-Latency Processing

Instead of sending motion data to the cloud, edge processors analyze gestures locally, reducing latency and protecting user privacy. This is crucial for:

- Automotive safety systems

- AR/VR headsets

- Industrial robots

Edge AI chips from Intel, Qualcomm, and NVIDIA are pushing on-device gesture inference latency below 10 milliseconds.
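Why local processing matters can be seen with a simplified latency budget: a capture wait of up to one frame interval, the model's inference time, and, for a cloud design, a network round trip. The figures below are hypothetical placeholders, not measurements of any chip or service.

```python
def end_to_end_ms(fps: float, inference_ms: float, network_rtt_ms: float = 0.0) -> float:
    """Simplified worst case: wait up to one frame for capture, run the model, add any network hop."""
    frame_interval_ms = 1000.0 / fps
    return frame_interval_ms + inference_ms + network_rtt_ms


# Hypothetical figures for illustration only.
edge = end_to_end_ms(fps=120, inference_ms=3)
cloud = end_to_end_ms(fps=120, inference_ms=3, network_rtt_ms=40)
print(f"edge: {edge:.1f} ms, cloud: {cloud:.1f} ms")
```

The capture rate matters as much as the chip: at 30 fps the frame interval alone is already about 33 ms.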

8. Motion Control in Robotics & Industry 4.0

In manufacturing and logistics, motion control ensures precision, speed, and coordination. Robots now use advanced control loops and machine learning for:

- Path optimization

- Collision avoidance

- Adaptive torque control

Example:

Collaborative robots (“cobots”) that respond to operator gestures or body movement for safe, intuitive control.
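One way a cobot can respond safely to a nearby operator is speed-and-separation monitoring: slow down as a tracked person gets closer and stop inside a protective distance. The sketch uses a linear ramp; the distances and ramp shape are hypothetical, and real limits come from a risk assessment under standards such as ISO/TS 15066.

```python
def speed_scale(separation_m: float, stop_m: float = 0.5, full_speed_m: float = 1.5) -> float:
    """Fraction of programmed speed allowed at a given human-robot separation.

    Below stop_m the robot halts; beyond full_speed_m it runs at full speed;
    in between the allowed speed ramps linearly. Thresholds are hypothetical.
    """
    if separation_m <= stop_m:
        return 0.0
    if separation_m >= full_speed_m:
        return 1.0
    return (separation_m - stop_m) / (full_speed_m - stop_m)


for d in (0.3, 1.0, 2.0):
    print(f"{d:.1f} m -> {speed_scale(d):.0%} speed")
```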

9. Motion Tracking in AR/VR & Metaverse Applications

The metaverse depends on realistic human motion capture. Cameras, gloves, and sensors map users’ hands and bodies into virtual spaces.

Recent trends:

- Inside-out tracking (headset cameras instead of external sensors)

- AI motion prediction for smoother avatars

- Full-body motion suits for gaming and industrial training
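Motion prediction hides pipeline latency by rendering where a joint is likely to be rather than where it was last seen. Production systems may use learned models; the simplest baseline, sketched here in place of an AI predictor, is constant-velocity extrapolation from the last two tracked samples. The sample values and the 20 ms look-ahead are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def predict_position(p_prev: Vec3, t_prev: float, p_curr: Vec3, t_curr: float,
                     t_future: float) -> Vec3:
    """Constant-velocity extrapolation from two timestamped samples (seconds, metres)."""
    dt = t_curr - t_prev
    if dt <= 0:
        raise ValueError("samples must be in increasing time order")
    velocity = tuple((c - p) / dt for p, c in zip(p_prev, p_curr))
    lead = t_future - t_curr
    return tuple(c + v * lead for c, v in zip(p_curr, velocity))


# Hypothetical wrist samples 10 ms apart, predicted 20 ms past the latest sample.
print(predict_position((0.10, 1.20, 0.30), 0.000, (0.11, 1.20, 0.30), 0.010, 0.030))
```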

10. Gesture Control in Automotive Systems

The automotive sector is a leader in gesture innovation. A growing number of cars respond to in-air gestures for infotainment, navigation, and safety alerts.

Technologies involved:

- ToF sensors in dashboards

- Radar-based motion detection for driver monitoring

- AI algorithms detecting driver fatigue or distraction

Major players: BMW, Mercedes-Benz, Tesla, Sony, and Hyundai are investing heavily in in-cabin gesture systems.
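Fatigue detection often builds on PERCLOS, the proportion of time the eyes are mostly closed over a rolling window. The sketch assumes an upstream face-landmark model already outputs a per-frame eye-openness value between 0 and 1. The 0.2 openness cutoff (approximating the common "at least 80% closed" definition) and the alert level are illustrative assumptions, not validated thresholds.

```python
from collections import deque


class PerclosMonitor:
    """Rolling PERCLOS: fraction of recent frames with the eyes at least ~80% closed."""

    def __init__(self, window_frames: int, closed_below: float = 0.2, alert_at: float = 0.15) -> None:
        self.frames = deque(maxlen=window_frames)
        self.closed_below = closed_below  # openness under this counts as "closed" (assumption)
        self.alert_at = alert_at          # PERCLOS level that triggers a warning (assumption)

    def update(self, eye_openness: float) -> bool:
        """Add one frame's openness (0 = shut, 1 = open); return True if an alert is due."""
        self.frames.append(eye_openness < self.closed_below)
        window_full = len(self.frames) == self.frames.maxlen
        return window_full and self.perclos() >= self.alert_at

    def perclos(self) -> float:
        return sum(self.frames) / len(self.frames) if self.frames else 0.0


# Hypothetical 30 fps stream with a 60 s window; alerts only once the window is full.
monitor = PerclosMonitor(window_frames=30 * 60)
alert = False
for i in range(30 * 60):
    openness = 0.1 if i % 5 == 0 else 0.9  # eyes closed in 20% of frames
    alert = monitor.update(openness)
print(f"PERCLOS = {monitor.perclos():.2f}, alert = {alert}")
```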

5. Emerging Technologies Powering Gesture Recognition

1. Computer Vision

AI-driven computer vision detects and classifies gestures using RGB and depth images. Algorithms can track 21 keypoints per hand (the layout used by MediaPipe Hands), along with facial landmarks and full-body skeletons.

2. Deep Learning Models

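Pretrained models like MediaPipe Hands, OpenPose, and YOLO-based architectures are widely used in real-time gesture classification, typically as the keypoint- or hand-detection stage whose output feeds a lightweight gesture classifier.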
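Keypoint models hand the gesture logic a set of landmarks per frame. As a sketch, assuming the 21-point hand layout MediaPipe Hands uses (index 0 wrist, 4 thumb tip, 8 index fingertip, 9 middle-finger knuckle), a pinch can be flagged when the thumb and index tips are close relative to hand size. The 0.25 ratio is a hypothetical threshold and the landmark list is synthetic; no MediaPipe API is called here.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]

WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9  # MediaPipe Hands landmark indices


def is_pinch(landmarks: Sequence[Point], ratio: float = 0.25) -> bool:
    """True when the thumb-index tip distance is small relative to palm size.

    Normalising by wrist-to-middle-knuckle length makes the test roughly
    independent of how far the hand is from the camera.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    palm = math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])
    gap = math.dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP])
    return palm > 0 and gap / palm < ratio


# Synthetic hand: only the four landmarks used above are meaningful.
hand = [(0.0, 0.0, 0.0)] * 21
hand[MIDDLE_MCP] = (0.0, 0.4, 0.0)
hand[THUMB_TIP] = (0.10, 0.50, 0.0)
hand[INDEX_TIP] = (0.12, 0.52, 0.0)
print(is_pinch(hand))
```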

3. Sensor Fusion

Combining multiple inputs (camera, radar, IMU) ensures robust accuracy, especially in noisy or occluded environments.
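A minimal illustration of why fusion helps: if a camera and a radar each give an independent, noisy estimate of the same quantity, weighting each by the inverse of its variance yields a combined estimate with lower variance than either alone. This is the static, one-dimensional core of a Kalman filter update; the readings and variances below are hypothetical.

```python
from typing import List, Tuple


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of (value, variance) pairs from independent sensors.

    Returns (fused_value, fused_variance), where fused variance = 1 / sum(1 / var_i).
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical hand-distance readings in metres: (value, variance).
camera = (0.52, 0.0004)  # precise in good light
radar = (0.48, 0.0016)   # noisier, but independent of lighting
value, variance = fuse([camera, radar])
print(f"fused: {value:.3f} m, variance {variance:.5f}")
```

If the camera's variance rises (for example in darkness), its weight falls automatically and the radar dominates.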

4. Neural Interfaces

A cutting-edge trend: using brain and muscle signals to enhance gesture recognition. Neural wristbands interpret EMG data to understand intent — even before visible motion occurs.

6. Major Industry Applications

| Industry | Applications | Benefits |
|---|---|---|
| Automotive | In-cabin control, driver monitoring | Safety, distraction reduction |
| Healthcare | Touchless surgery tools, physical therapy | Hygiene, rehabilitation |
| Gaming/AR/VR | Immersive controls | Natural interaction |
| Manufacturing | Robot control, quality assurance | Efficiency, safety |
| Smart Homes | Appliance control, gesture lighting | Convenience, accessibility |
| Retail & Kiosks | Touchless checkout | Hygiene, user engagement |
| Security | Gesture-based authentication | Enhanced privacy |

7. Top Companies and Innovators in Gesture Technology (2025)

- Google: Soli radar sensor, shipped in the Pixel 4 and the second-generation Nest Hub

- Apple: Gesture control integration in Apple Watch and Vision Pro

- Sony: Advanced motion tracking for PlayStation VR2

- Microsoft: Kinect’s evolution for industrial & medical use

- Ultraleap (formed from Leap Motion and Ultrahaptics): Precision hand-tracking hardware for XR, mid-air haptics, and 3D gesture recognition

- Qualcomm & Intel: AI edge processors for motion control

- Tesla & BMW: Gesture-controlled infotainment systems

- Samsung & LG: Smart TVs and appliances with gesture UI

- Nordic Semiconductor: Low-power wireless chips used in motion-sensing wearables

8. Challenges in Motion Control & Gesture Systems

Despite rapid progress, key challenges remain:

- Lighting & Environmental Factors — Vision systems struggle in glare or darkness (mitigated by radar/ToF).

- Power Consumption — Continuous sensing drains battery on wearables.

- Privacy Concerns — Cameras and sensors raise data security issues.

- Gesture Fatigue — Repetitive movements can be tiring; UX design must consider ergonomics.

- Standardization — Lack of universal gesture language limits cross-platform usability.

- Cost of Precision Sensors — High-end LiDAR and radar modules increase BOM costs.

- Processing Latency — Real-time gesture recognition requires high-speed AI chips.

9. Future Outlook: The Next 5 Years of Motion & Gesture Technology

Here’s what’s coming next:

1. Emotion Recognition

Systems that detect not only movement but emotion — combining gesture, posture, and facial analysis.

2. Neural Motion Interfaces

Gesture input merged with brain–computer interface (BCI) signals, allowing control through thought and micro-movements.

3. Advanced Edge AI

With AI chips built into every device, gesture recognition will become universal — from watches to appliances.

4. Haptic Holograms

Combining motion tracking with mid-air haptics to let users feel virtual objects in AR environments.

5. Universal Gesture Language

Industry-wide collaboration may lead to standardized gestures (like sign language for machines).

6. Smart Robotics

Gesture-driven robots in healthcare, logistics, and eldercare that interpret commands naturally.

7. Automotive Personalization

Cars that learn individual drivers’ preferred gestures and habits, adapting automatically.

10. FAQs

Q1. What is gesture recognition technology?

Gesture recognition is the use of sensors, cameras, or radar to detect and interpret human gestures — such as hand waves, head nods, or body movements — allowing control of devices without touch.

Q2. Where is motion control used?

Motion control is used in robotics, manufacturing, drones, AR/VR systems, smart homes, and automotive systems to enable responsive and precise movement tracking.

Q3. What sensors are used in gesture technology?

Common sensors include infrared cameras, radar, LiDAR, ultrasonic sensors, IMUs, and electromyography (EMG) sensors.

Q4. What are the benefits of gesture control?

Gesture control provides hands-free interaction, enhances hygiene, improves accessibility, and enables more immersive digital experiences.

Q5. How does AI enhance motion control?

AI improves motion control by learning complex movement patterns, predicting user intent, and reducing false positives in gesture detection.

Q6. What is the difference between motion tracking and gesture recognition?

Motion tracking monitors physical movement in space, while gesture recognition interprets those movements as specific commands or actions.
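The distinction is easy to see in code: tracking supplies a stream of positions, and recognition turns that stream into a command. The sketch assumes a tracker already reports time-ordered, normalised horizontal hand positions (0 = left edge, 1 = right edge); the travel and time thresholds are hypothetical.

```python
from typing import List, Optional, Tuple


def detect_swipe(track: List[Tuple[float, float]], min_travel: float = 0.3,
                 max_duration_s: float = 0.5) -> Optional[str]:
    """Map a time-ordered (timestamp_s, x) series to 'swipe_left', 'swipe_right', or None.

    Looks for any pair of samples within max_duration_s whose horizontal
    travel is at least min_travel.
    """
    for i, (t0, x0) in enumerate(track):
        for t1, x1 in track[i + 1:]:
            if t1 - t0 > max_duration_s:
                break
            if x1 - x0 >= min_travel:
                return "swipe_right"
            if x0 - x1 >= min_travel:
                return "swipe_left"
    return None


# Motion tracking output: the hand moves from x = 0.2 to 0.7 in 0.3 s.
tracked = [(0.0, 0.2), (0.1, 0.35), (0.2, 0.55), (0.3, 0.7)]
print(detect_swipe(tracked))  # gesture recognition output: a command name
```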

11. Case Studies & Real-World Implementations

Healthcare Example

Hospitals use AI motion cameras in operating rooms to let surgeons flip through images using simple hand waves, maintaining sterility and efficiency.

Automotive Example

The BMW 7 Series allows drivers to control infotainment functions via mid-air gestures detected by infrared and ToF sensors.

Gaming Example

Sony’s PlayStation VR2 uses infrared cameras and IMUs to deliver lifelike motion capture for immersive gameplay.

Smart Home Example

LG’s ThinQ appliances respond to wave gestures for control — ideal for kitchens or bathrooms where hands are often occupied.

12. Business & Market Growth

The gesture recognition and motion control market is growing at a CAGR of over 20%, with the biggest adoption in:

- Consumer electronics (smartphones, TVs, wearables)

- Automotive (driver monitoring, infotainment)

- Industrial automation

- AR/VR

- Healthcare

By 2030, the market is expected to surpass $70 billion, driven by AI integration and lower sensor costs.
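These figures are consistent with the market size given in the introduction. Compounding roughly $30 billion in 2025 at 20% a year (the lower bound stated above) for five years gives about $75 billion by 2030, and the same rate implies a doubling time of just under four years. The arithmetic, using only the numbers quoted in this article:

```python
import math


def project(value: float, annual_growth: float, years: int) -> float:
    """Compound growth: value * (1 + g) ** years."""
    return value * (1 + annual_growth) ** years


def doubling_time_years(annual_growth: float) -> float:
    """Years for a quantity growing at rate g to double: ln 2 / ln(1 + g)."""
    return math.log(2) / math.log(1 + annual_growth)


print(f"2030 market: ${project(30, 0.20, 5):.1f}B")  # 2025 base of $30B, 20% CAGR
print(f"doubling time: {doubling_time_years(0.20):.1f} years")
```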

13. Ethical Considerations and Data Privacy

As gesture technology uses cameras and sensors to track human movement, developers must prioritize:

- Data minimization — collecting only what’s necessary

- On-device processing — avoiding unnecessary cloud storage

- Transparent user consent — explaining data use clearly

- AI fairness — ensuring models work across diverse demographics

Compliance with privacy regulations such as the GDPR and CCPA is already required wherever they apply, and camera or biometric data captured by gesture-enabled products can fall within their scope.

14. The Future User Experience

In the near future, human–computer interaction will feel invisible. Imagine:

- Opening your laptop with a wave

- Adjusting your car’s air conditioning with a hand flick

- Controlling AR content with finger pinches in the air

- Robots that understand sign language

The boundary between humans and machines is dissolving — and gesture recognition is at the center of that transformation.

15. Conclusion: A Natural Way to Control Technology

Gesture and motion control technologies are redefining how humans interact with the digital world. Powered by AI, advanced sensors, and edge computing, they are enabling a future where technology responds to you, not the other way around.

From autonomous vehicles to AR experiences, from industrial robots to healthcare devices, gesture recognition is the foundation of natural, intuitive, and safe human–machine interfaces.

As costs drop and accuracy improves, expect to see gesture control in everything — your smartwatch, car, kitchen, and even public kiosks. The ultimate goal is seamless interaction: no buttons, no remotes, no screens — just motion, intent, and connection.
